echo "Hello Nginx" > /usr/share/nginx/html/index.html

Nginx Plus Use Case

Basic Configuration

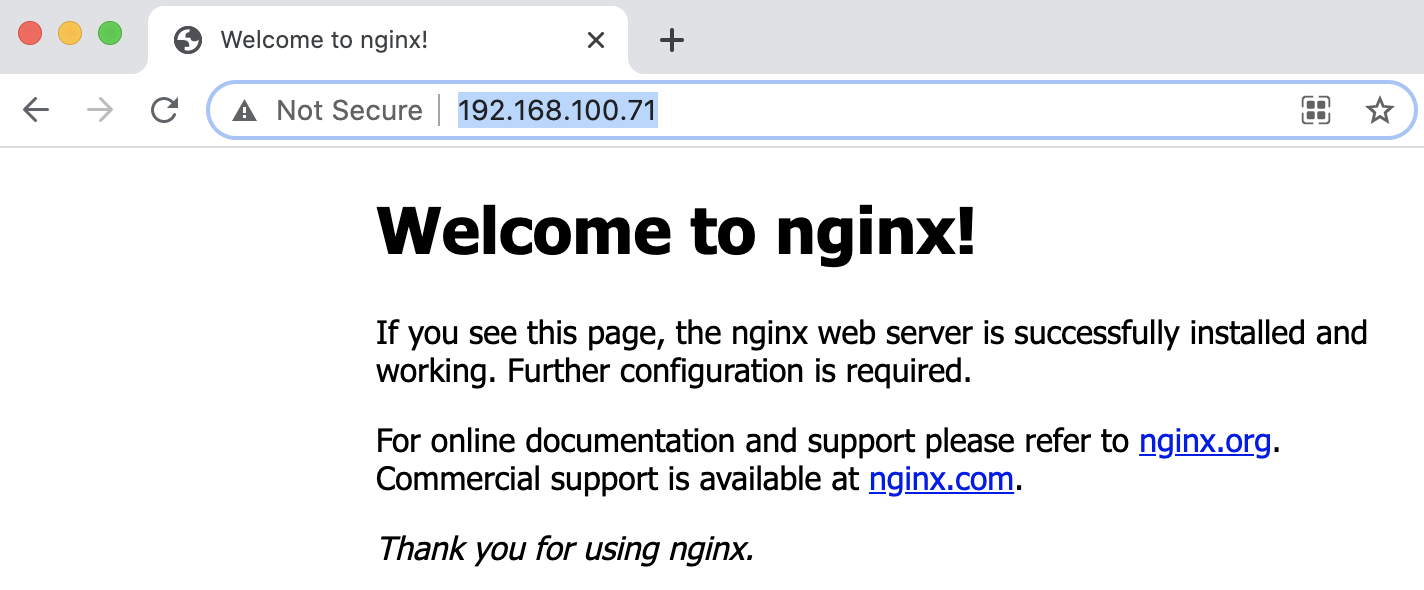

Custom Welcome Page

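| ITEM | NOTE |
|---|---|
| Purpose | Verify that the default welcome page can be customized. |
| Steps | `echo "ok" > /usr/share/nginx/html/hello` |
| Result | (1) Access the default welcome page. (2) Access the custom test page. |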

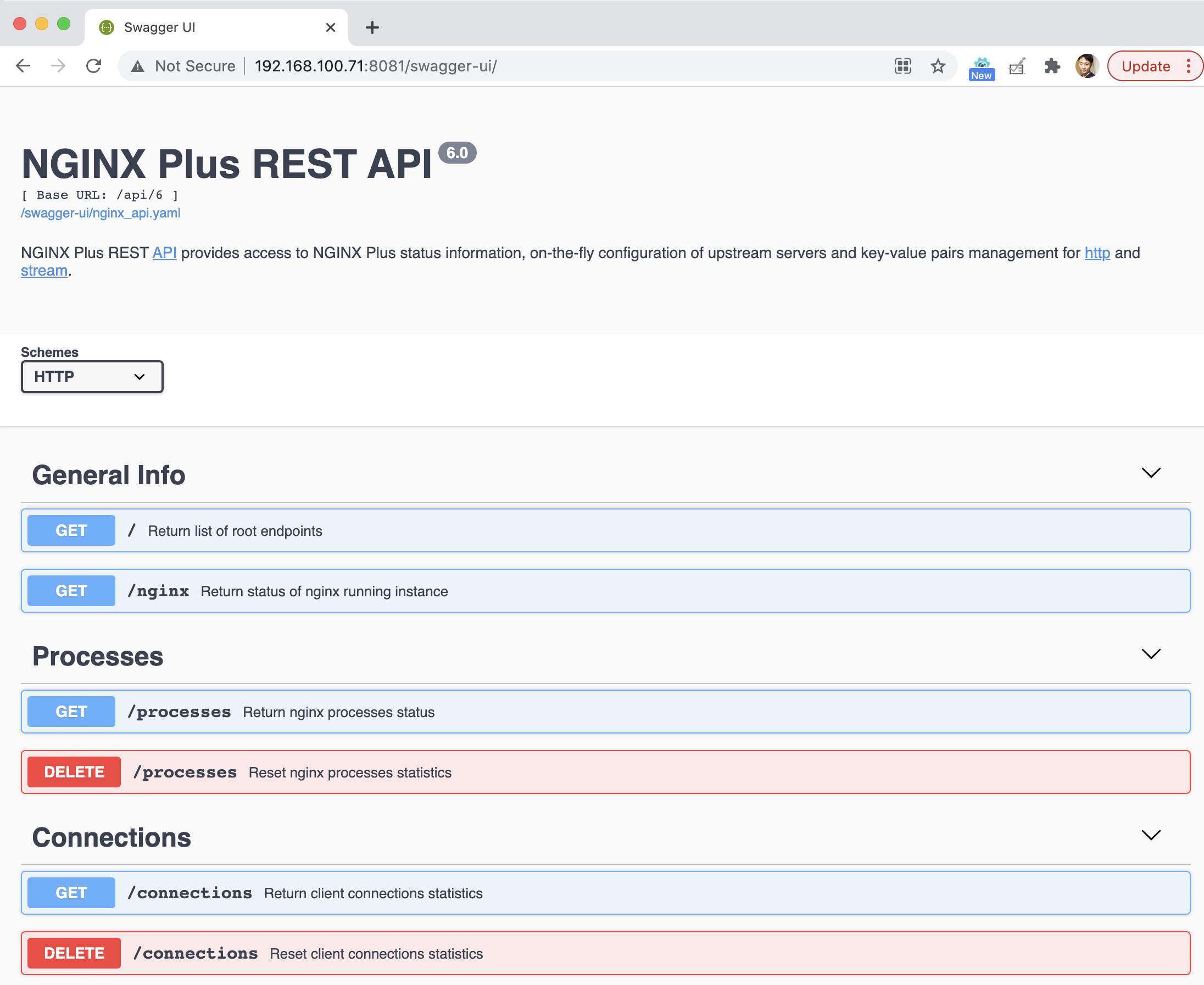

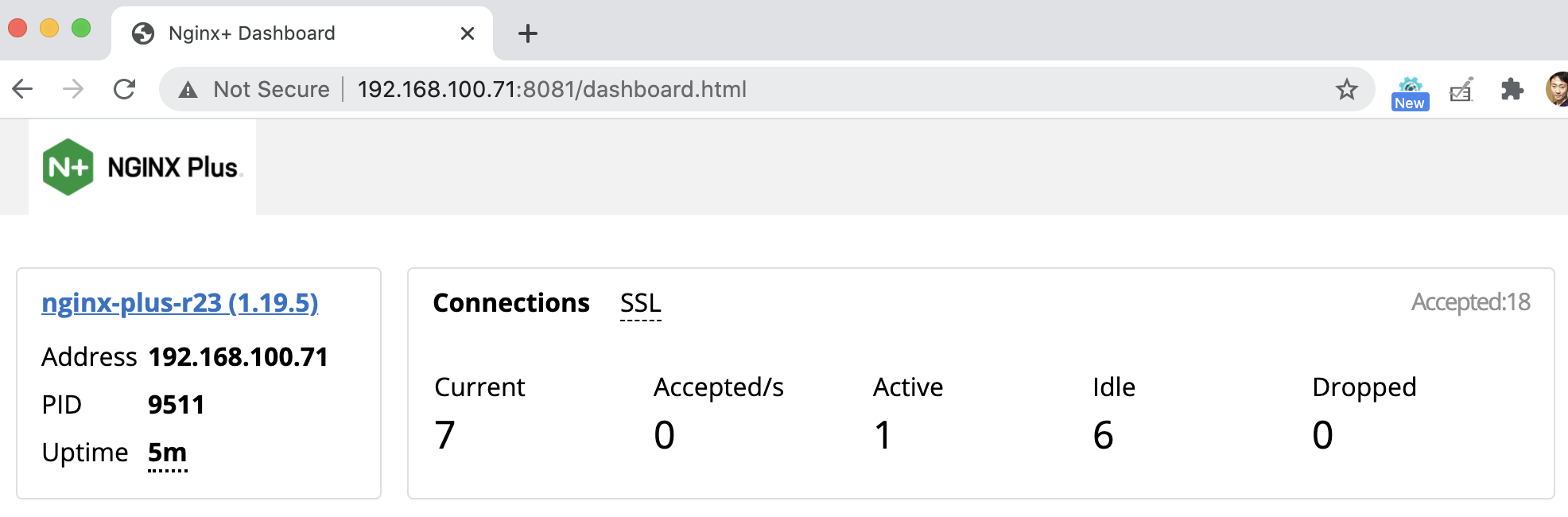

API and Dashboard Capabilities

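| ITEM | NOTE |
|---|---|
| Purpose | Nginx Plus provides an API and a Dashboard that visualize the status of Nginx Plus itself and of application delivery. |
| Steps | (1) Back up the default configuration. (2) Create a new default.conf file. (3) Reload. |
| Result | |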
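The contents of default.conf are not shown in the steps above. The following is a minimal sketch of what such a file might contain, using the `api` directive and the dashboard page that ship with Nginx Plus. The listen port 8080 is an assumption for illustration.

```nginx
# default.conf (sketch): expose the Nginx Plus API and the built-in dashboard.
# The listen port is an assumption, not taken from the original setup.
server {
    listen 8080;

    # REST API; write=on also allows runtime changes such as editing upstream servers.
    location /api {
        api write=on;
    }

    # Dashboard page installed with Nginx Plus.
    location = /dashboard.html {
        root /usr/share/nginx/html;
    }
}
```

After a reload (for example `nginx -t && nginx -s reload`), the dashboard would be reachable at `/dashboard.html` on the assumed port. In a shared environment, access to `/api` should normally be restricted.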

Load Balancing

Behavior During Traffic Forwarding

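| ITEM | NOTE |
|---|---|
| Headers with empty values are dropped by default | (1) Send a request with an empty X-Forwarded-For HTTP header and inspect the HTTP request headers seen by the application. (2) Send a request with a non-empty X-Forwarded-For HTTP header and inspect the HTTP request headers seen by the application. |
| Headers whose names contain an underscore "_" are silently dropped | (1) Send a request with a header whose name contains an underscore and inspect the HTTP request headers seen by the application. (2) Set `underscores_in_headers on` in the server block, repeat the request, and check whether the configuration takes effect. |
| Host header replacement | (1) When the service is accessed directly, the Host value is 10.1.10.8:8080. (2) When the service is accessed through the proxy, the Host value becomes forwardhttp: the Host header is rewritten to the value of the `$proxy_host` variable, which is the domain name or IP address given in the `proxy_pass` directive. |
| The Connection header is changed to close, and HTTP 1.1 is changed to HTTP 1.0 | (1) Record the Connection and protocol information when the browser accesses the service directly. (2) Record the Connection and protocol information when the browser accesses the service through the proxy. |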
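The behaviors in the table above can each be overridden in the proxy configuration. The sketch below assumes an upstream named `forwardhttp` (the Host value observed above) pointing at 10.1.10.8:8080; the listen port 8001 is a hypothetical value.

```nginx
# Sketch: override the default forwarding behaviors listed above.
upstream forwardhttp {
    server 10.1.10.8:8080;
}

server {
    listen 8001;                      # assumed port
    underscores_in_headers on;        # accept request headers whose names contain "_"

    location / {
        proxy_pass http://forwardhttp;
        proxy_set_header Host $host;        # pass the client's Host instead of $proxy_host
        proxy_http_version 1.1;             # the tests above observed HTTP/1.0 toward the backend
        proxy_set_header Connection "";     # avoid sending "Connection: close" to the backend
    }
}
```

One point worth checking when testing `underscores_in_headers`: according to the nginx documentation, when the directive is set at the server level, the value from the default server for that listen socket can be the one that is used.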

Obtaining the Real Client IP on the Server

| ITEM | NOTE |
|---|---|
| Purpose | Show how a backend server behind Nginx Plus obtains the real client IP. |
| Steps | (1) Apply the configuration. (2) Run the access tests. |

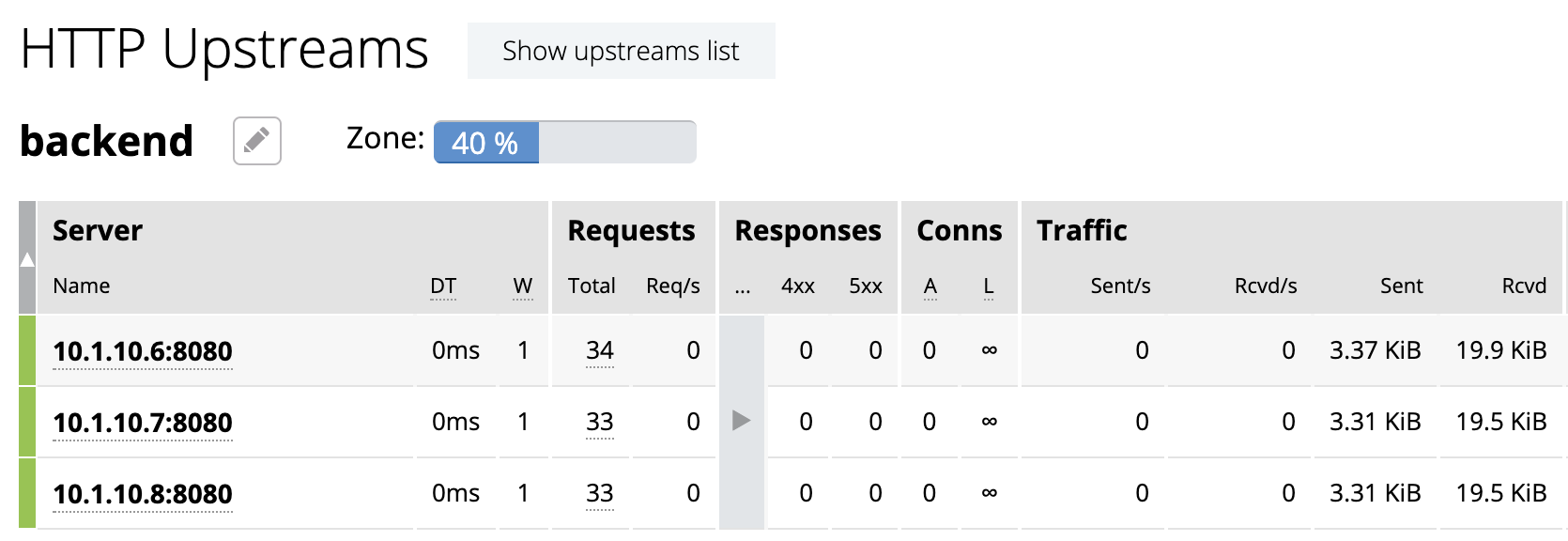
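The configuration used in this test is not included above. A common way to pass the client address to the backend is to set the `X-Real-IP` and `X-Forwarded-For` headers, sketched below. The upstream name `realip_backend` and the listen port 8002 are hypothetical.

```nginx
# Sketch: forward the client address to the backend in request headers.
upstream realip_backend {
    server 10.1.10.8:8080;
}

server {
    listen 8002;   # assumed port

    location / {
        proxy_pass http://realip_backend;
        # Address of the client that connected to Nginx.
        proxy_set_header X-Real-IP $remote_addr;
        # Any incoming X-Forwarded-For value with $remote_addr appended.
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

The backend application then reads the client address from one of these headers rather than from the TCP peer address, which is always the proxy.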

Round-Robin Load Balancing

| ITEM | NOTE |

|---|---|

目的 |

Nginx Plus 支持轮询的调度算法 |

步骤 |

默认算法为轮询,新建配置文件rr.conf

重新加载

|

结果 |

命令行访问测试

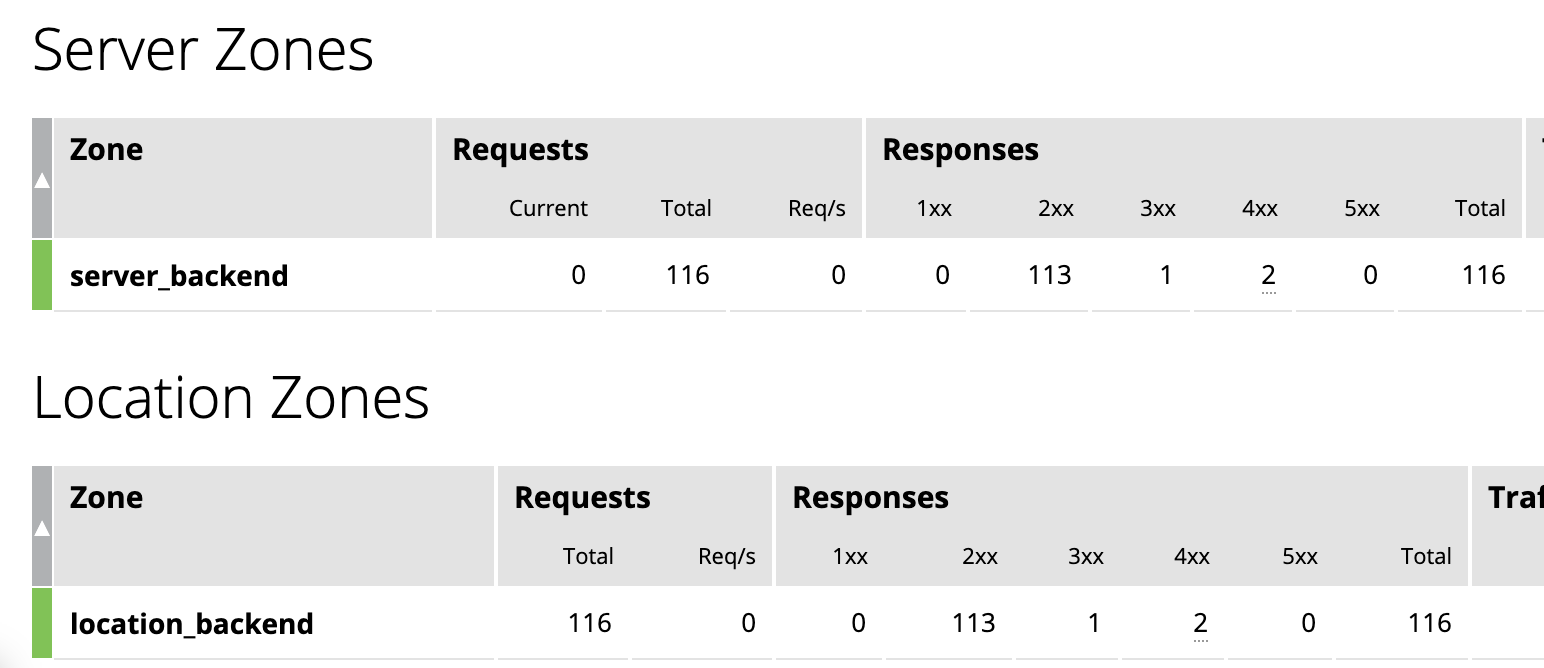

Dashboard 上查看统计数据

|

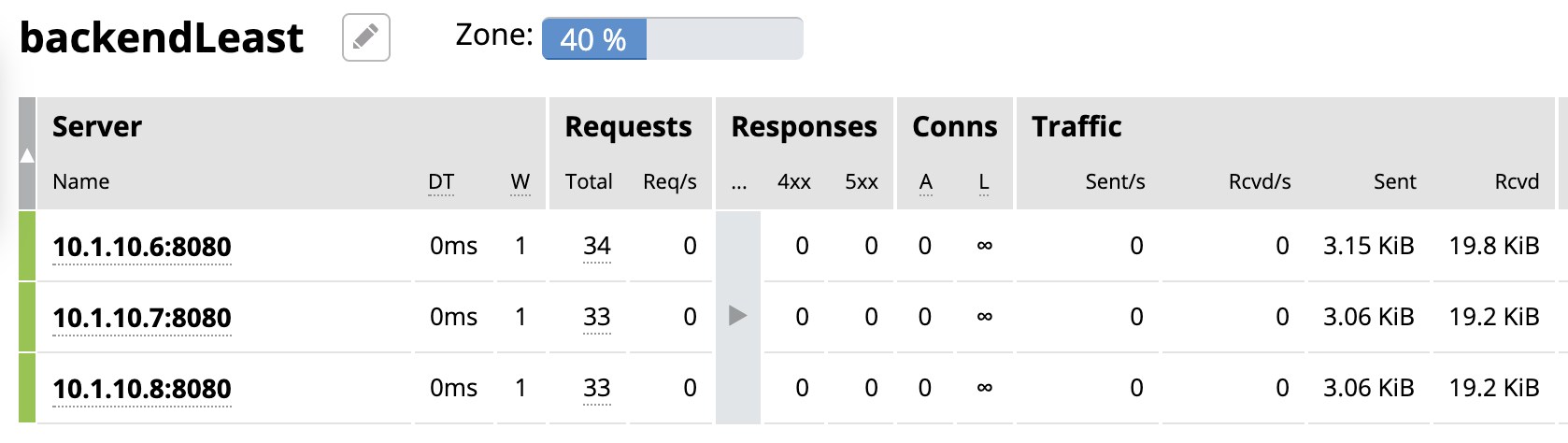
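The contents of rr.conf are not reproduced in the steps above. A minimal sketch follows, assuming three backends at 10.1.10.6, 10.1.10.7 and 10.1.10.8 on port 8080 (only 10.1.10.8:8080 is named elsewhere in this document) and a hypothetical listen port 8081.

```nginx
# rr.conf (sketch): round-robin is used when no balancing method is specified.
upstream rr_backend {
    zone rr_backend 64k;        # shared memory zone, needed for per-server dashboard statistics
    server 10.1.10.6:8080;      # assumed backend addresses
    server 10.1.10.7:8080;
    server 10.1.10.8:8080;
}

server {
    listen 8081;                # assumed port
    status_zone rr_server;      # makes this server visible on the dashboard

    location / {
        proxy_pass http://rr_backend;
    }
}
```

Repeated requests, for example `curl http://<proxy>:8081/` run several times, should then be spread evenly across the three servers.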

Least-Connections Load Balancing

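| ITEM | NOTE |
|---|---|
| Purpose | Nginx Plus supports the least-connections scheduling algorithm. |
| Steps | (1) Create the configuration file least.conf. (2) Reload. |
| Result | (1) Test access from the command line. (2) View the statistics on the Dashboard. |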

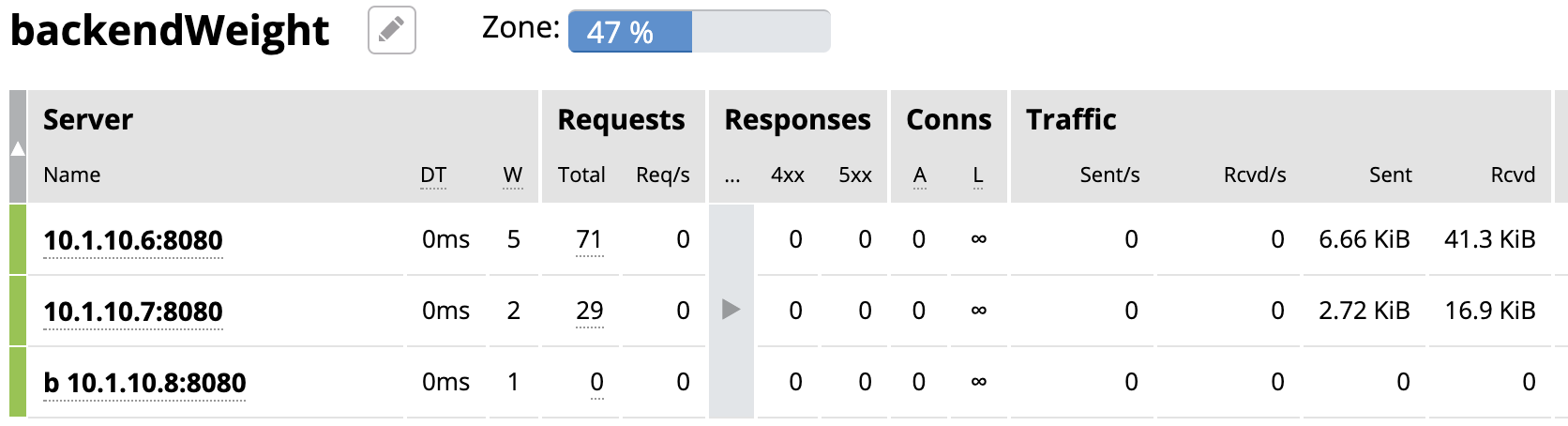
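As an illustration of what least.conf could look like, the sketch below adds the `least_conn` directive to an upstream. The backend addresses, the upstream name and the listen port 8082 are assumptions.

```nginx
# least.conf (sketch): send each request to the server with the fewest active connections.
upstream least_backend {
    zone least_backend 64k;
    least_conn;                 # server weights are also taken into account
    server 10.1.10.6:8080;      # assumed backend addresses
    server 10.1.10.7:8080;
    server 10.1.10.8:8080;
}

server {
    listen 8082;                # assumed port

    location / {
        proxy_pass http://least_backend;
    }
}
```

With short, uniform requests the distribution looks similar to round-robin; the difference shows when some backends hold connections longer than others.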

Weighted and Priority Load Balancing

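| ITEM | NOTE |
|---|---|
| Purpose | Nginx Plus supports weight-based and priority-based scheduling. |
| Steps | (1) Create the configuration file weight.conf. (2) Reload. |
| Result | (1) Test access from the command line. (2) View the statistics on the Dashboard. |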

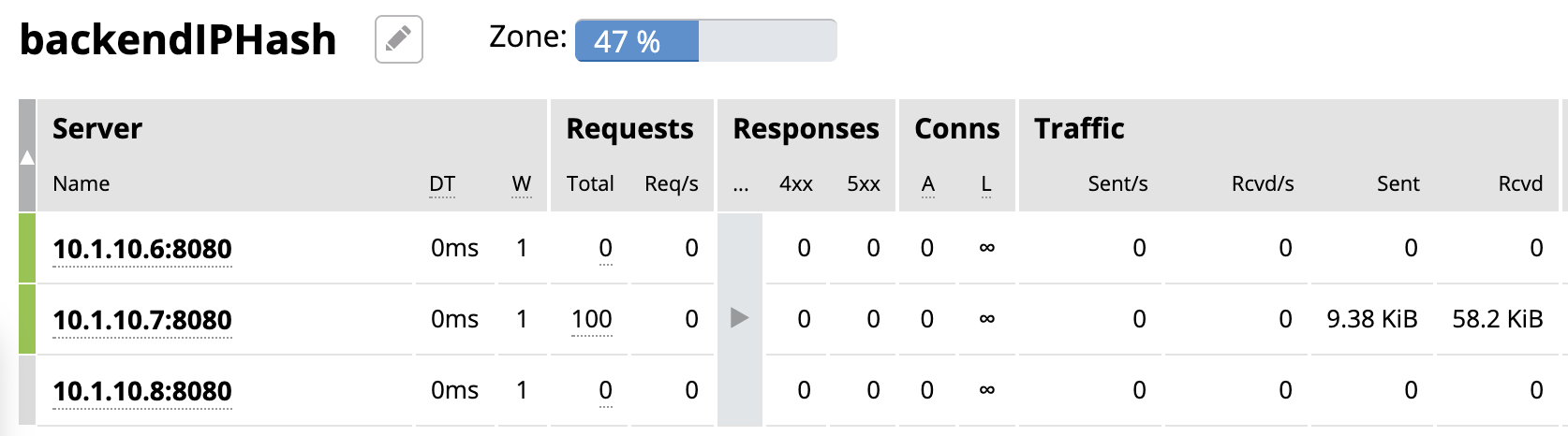
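The original weight.conf is not shown. One possible sketch uses the `weight` parameter for proportional distribution and the `backup` parameter as a simple form of priority. Reading "priority" as a backup server is an assumption here, as are the addresses, weights and listen port.

```nginx
# weight.conf (sketch): weighted distribution with a lower-priority backup server.
upstream weight_backend {
    zone weight_backend 64k;
    server 10.1.10.6:8080 weight=5;   # receives about five requests for each one sent to 10.1.10.7
    server 10.1.10.7:8080 weight=1;
    server 10.1.10.8:8080 backup;     # used only when the primary servers are unavailable
}

server {
    listen 8083;                      # assumed port

    location / {
        proxy_pass http://weight_backend;
    }
}
```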

IP Hash Load Balancing

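| ITEM | NOTE |
|---|---|
| Purpose | Nginx Plus supports the IP hash scheduling algorithm. |
| Steps | (1) Create the configuration file iphash.conf. (2) Reload. |
| Result | (1) Test access from the command line. (2) View the statistics on the Dashboard. |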

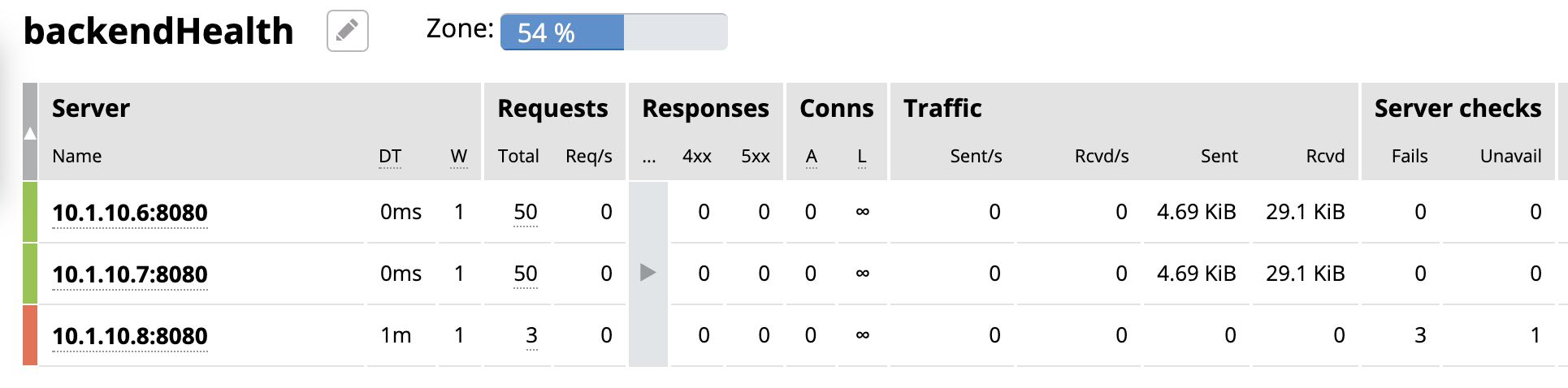
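A sketch of iphash.conf under the same assumed backends is shown below. The upstream name and the listen port 8084 are hypothetical.

```nginx
# iphash.conf (sketch): requests from the same client address go to the same server.
upstream iphash_backend {
    zone iphash_backend 64k;
    ip_hash;                    # for IPv4, the first three octets of the client address form the hash key
    server 10.1.10.6:8080;      # assumed backend addresses
    server 10.1.10.7:8080;
    server 10.1.10.8:8080;
}

server {
    listen 8084;                # assumed port

    location / {
        proxy_pass http://iphash_backend;
    }
}
```

Because only the first three octets are hashed, all test clients in the same /24 network land on the same backend, which is worth keeping in mind when reading the dashboard counters.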

Passive Health Checks

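| ITEM | NOTE |
|---|---|
| Purpose | Nginx Plus supports passive health checks. |
| Steps | (1) Create the configuration file health.conf. (2) Reload. (3) Shut down the service at 10.1.10.8:8080. |
| Result | (1) Test access from the command line. (2) View the statistics on the Dashboard. |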

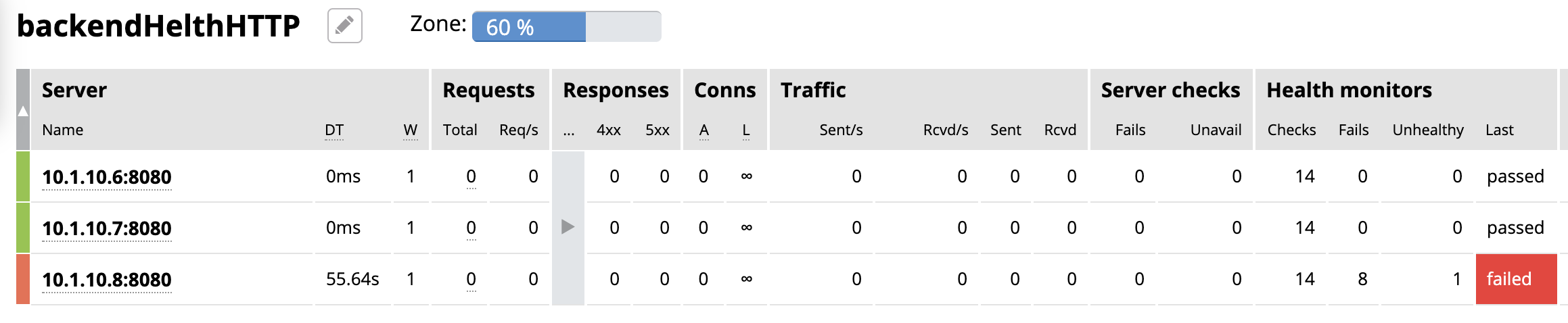

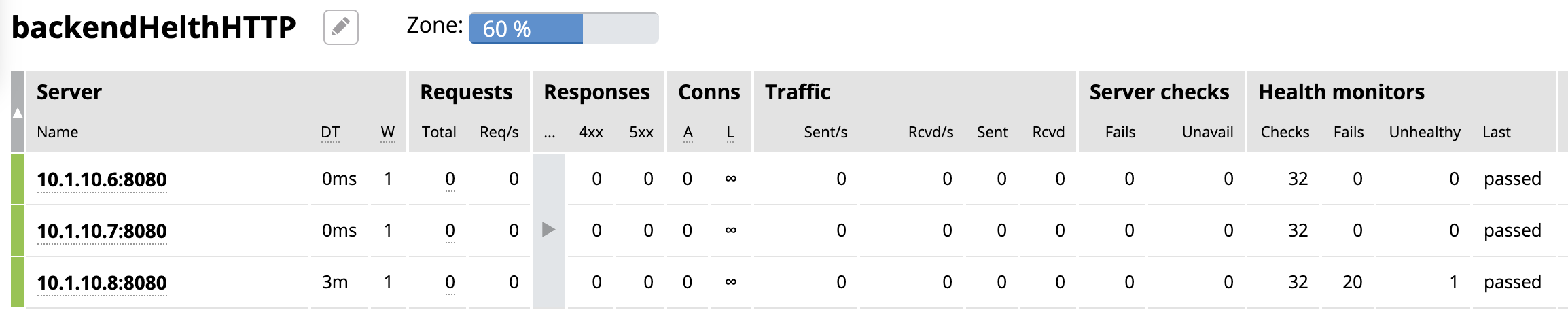
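Passive health checks are controlled by the `max_fails` and `fail_timeout` server parameters. The sketch below shows one way health.conf might be written; the thresholds, backend addresses and listen port 8085 are assumptions.

```nginx
# health.conf (sketch): mark a server unavailable after repeated failed attempts.
upstream passive_backend {
    zone passive_backend 64k;
    # After 2 failed attempts within 10s the server is skipped for the next 10s.
    server 10.1.10.6:8080 max_fails=2 fail_timeout=10s;
    server 10.1.10.7:8080 max_fails=2 fail_timeout=10s;
    server 10.1.10.8:8080 max_fails=2 fail_timeout=10s;
}

server {
    listen 8085;                # assumed port

    location / {
        proxy_pass http://passive_backend;
    }
}
```

After 10.1.10.8:8080 is shut down, the first few requests routed to it fail over to another server, and then it stops receiving traffic until the timeout expires.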

HTTP-Based Active Health Checks

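| ITEM | NOTE |
|---|---|
| Purpose | Nginx Plus supports HTTP-based active health checks. |
| Steps | (1) Create the configuration file healthHTTP.conf. (2) Reload. (3) Shut down the service at 10.1.10.8:8080. |
| Result | |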

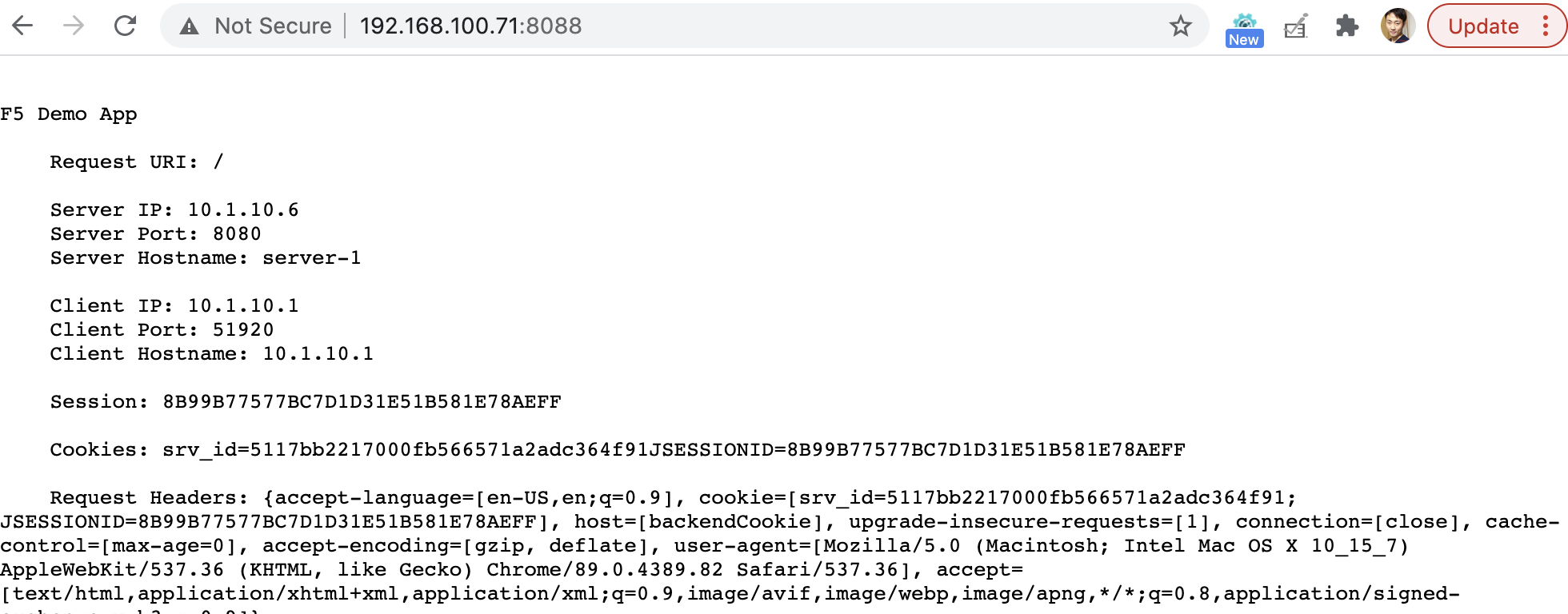

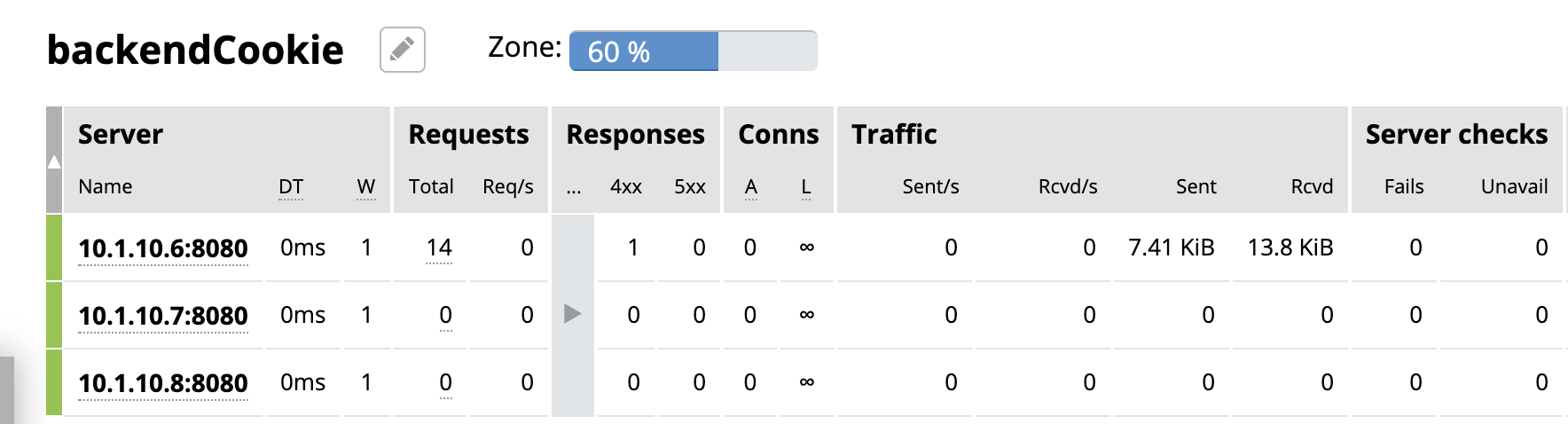
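Active checks use the Nginx Plus `health_check` directive together with an optional `match` block. The following sketch of healthHTTP.conf assumes the probe URI `/`, the intervals shown, the backend addresses and the listen port 8086.

```nginx
# healthHTTP.conf (sketch): probe each backend periodically over HTTP.
upstream hc_backend {
    zone hc_backend 64k;        # a shared memory zone is required for health_check
    server 10.1.10.6:8080;      # assumed backend addresses
    server 10.1.10.7:8080;
    server 10.1.10.8:8080;
}

# A probe passes when the response status is in the 200-399 range.
match server_ok {
    status 200-399;
}

server {
    listen 8086;                # assumed port

    location / {
        proxy_pass http://hc_backend;
        # Probe every 5s; 3 failures mark a server unhealthy, 2 passes restore it.
        health_check interval=5 fails=3 passes=2 uri=/ match=server_ok;
    }
}
```

Unlike the passive check, the failed backend is detected by the probes themselves, so client requests should not be sent to it once it is marked unhealthy.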

Cookie-Based Session Persistence

| ITEM | NOTE |
|---|---|
| Purpose | Nginx Plus supports cookie-based session persistence. |
| Steps | (1) Create the configuration file persisCookie.conf. (2) Reload. |
| Result | |
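Cookie-based persistence in Nginx Plus is configured with the `sticky cookie` directive. The sketch below is one possible persisCookie.conf; the cookie name `srv_id`, its lifetime, the backend addresses and the listen port 8087 are assumptions.

```nginx
# persisCookie.conf (sketch): pin a client to the server chosen for its first request.
upstream cookie_backend {
    zone cookie_backend 64k;
    server 10.1.10.6:8080;      # assumed backend addresses
    server 10.1.10.7:8080;
    server 10.1.10.8:8080;
    # Nginx Plus adds this cookie to the first response and uses it to route later requests.
    sticky cookie srv_id expires=1h path=/;
}

server {
    listen 8087;                # assumed port

    location / {
        proxy_pass http://cookie_backend;
    }
}
```

A test client that stores and resends cookies (for example `curl -c jar -b jar ...`) should keep reaching the same backend, while a client without the cookie is balanced normally.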

Load Balancing for Different Application Types

| ITEM | NOTE |

|---|---|

目的 |

Nginx Plug 对不同应用负载均衡的能力。 |

L7 |

L7 配置

重新加载

访问测试

|

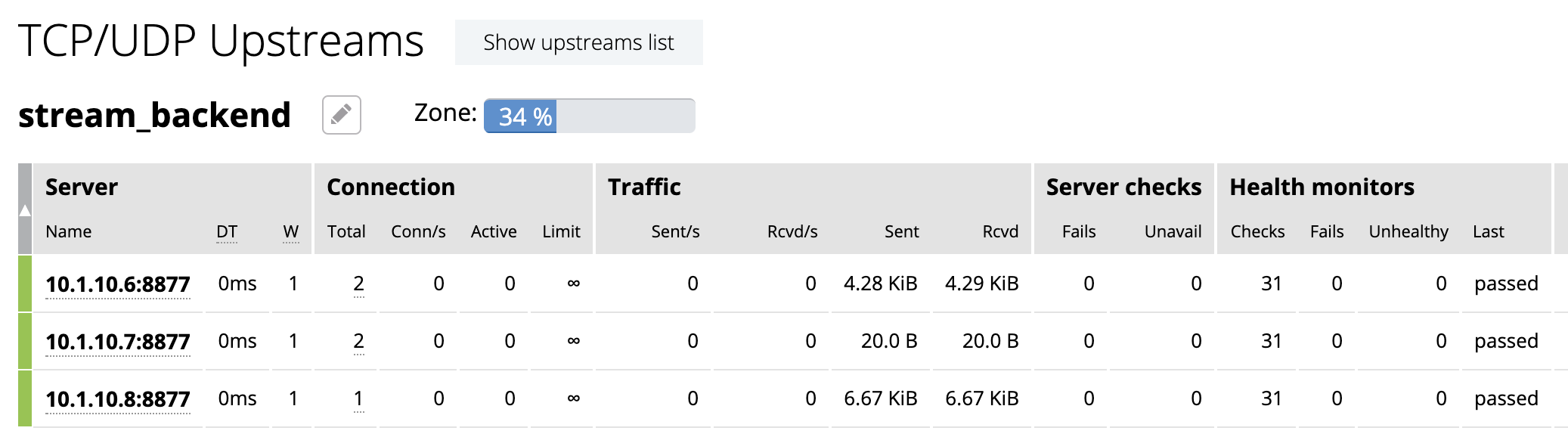

L4 |

准备echoserver 应用 参照 https://github.com/kylinsoong/networks/tree/master/echo,编译出 拷贝 echoserver 到三台服务器,异常启动 4 层应用,启动后监听在 8877 端口

配置文件

测试

统计结果

|
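The L4 configuration file is not included in the table. A minimal sketch using the `stream` module is given below. It assumes the three echoserver hosts are 10.1.10.6, 10.1.10.7 and 10.1.10.8, and that the proxy also listens on port 8877.

```nginx
# L4 sketch: TCP load balancing for the echoserver application.
# The stream block belongs at the top level of nginx.conf, not inside http {}.
stream {
    upstream echoserver {
        zone echoserver 64k;
        server 10.1.10.6:8877;   # assumed echoserver hosts
        server 10.1.10.7:8877;
        server 10.1.10.8:8877;
    }

    server {
        listen 8877;             # assumed proxy port
        status_zone echoserver;  # exposes TCP statistics on the dashboard
        proxy_pass echoserver;
    }
}
```

Note that files under conf.d are usually included inside the `http` context, so a stream configuration placed there would be rejected unless nginx.conf includes it separately.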

| ITEM | NOTE |
|---|---|
| gRPC | (1) Configure grpc_gateway.conf (listed below). (2) Configure errors.grpc_conf. (3) Test. |

grpc_gateway.conf:

    log_format grpc_json escape=json '{"timestamp":"$time_iso8601","client":"$remote_addr",'
                                      '"uri":"$uri","http-status":$status,'
                                      '"grpc-status":$grpc_status,"upstream":"$upstream_addr",'
                                      '"rx-bytes":$request_length,"tx-bytes":$bytes_sent}';

    map $upstream_trailer_grpc_status $grpc_status {
        default $upstream_trailer_grpc_status; # We normally expect to receive grpc-status as a trailer
        ''      $sent_http_grpc_status;        # Else use the header, regardless of who generated it
    }

    server {
        listen 50051 http2; # In production, comment out to disable plaintext port
        access_log /var/log/nginx/grpc_log.json grpc_json;

        # Routing
        location /helloworld. {
            status_zone location_backend;
            grpc_pass grpc://helloworld_service;
        }

        # Error responses
        include conf.d/errors.grpc_conf; # gRPC-compliant error responses
        default_type application/grpc;   # Ensure gRPC for all error responses
    }

    # Backend gRPC servers
    upstream helloworld_service {
        zone helloworld_service 64k;
        server 10.1.10.6:50051;
        server 10.1.10.7:50051;
        server 10.1.10.8:50051;
    }
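The contents of errors.grpc_conf are not listed. The idea of such a file is to turn HTTP errors generated by Nginx into gRPC status responses that a gRPC client can interpret. The sketch below covers only two cases and is not the complete mapping; the choice of which HTTP codes to map is an assumption.

```nginx
# errors.grpc_conf (sketch): translate Nginx-generated HTTP errors into gRPC statuses.
error_page 404 = @grpc_unimplemented;
error_page 502 503 504 = @grpc_unavailable;

location @grpc_unimplemented {
    add_header grpc-status 12;              # gRPC status 12: UNIMPLEMENTED
    add_header grpc-message unimplemented;
    return 204;
}

location @grpc_unavailable {
    add_header grpc-status 14;              # gRPC status 14: UNAVAILABLE
    add_header grpc-message unavailable;
    return 204;
}
```

With a mapping like this, calling a method that no `location` routes, or calling the service while all backends are down, returns a gRPC error to the client instead of an HTML error page.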

Content Caching

| ITEM | NOTE |
|---|---|
| Purpose | Demonstrate the content caching capability of Nginx Plus. |
| Steps | (1) Create a new cache.conf file. (2) Reload. |
| Result | |
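The original cache.conf is not shown. A minimal sketch of a proxy cache follows; the cache path, zone name, sizes, validity times, backend address and listen port 8088 are all assumptions.

```nginx
# cache.conf (sketch): cache backend responses on local disk.
proxy_cache_path /var/cache/nginx/demo levels=1:2 keys_zone=demo_cache:10m
                 max_size=1g inactive=60m use_temp_path=off;

upstream cache_backend {
    server 10.1.10.8:8080;       # assumed backend
}

server {
    listen 8088;                 # assumed port

    location / {
        proxy_pass http://cache_backend;
        proxy_cache demo_cache;
        proxy_cache_valid 200 302 10m;   # keep successful responses for 10 minutes
        proxy_cache_valid 404 1m;
        # Report MISS, HIT, EXPIRED and so on to the client for verification.
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

Requesting the same URL twice and comparing the `X-Cache-Status` response header is a simple way to confirm that the second response is served from the cache.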

Performance Tuning

Tuning OS Resource Limits

This part describes how to tune the OS resource limits that Nginx depends on.

Check the CPU count:

    # lscpu | grep CPU
    CPU op-mode(s):        32-bit, 64-bit
    CPU(s):                2
    On-line CPU(s) list:   0,1
    CPU family:            6
    Model name:            Intel(R) Xeon(R) CPU E5-2680 0 @ 2.70GHz
    CPU MHz:               2699.999
    NUMA node0 CPU(s):     0,1

Check the Nginx processes:

    # ps -ef | grep nginx
    root 955 1 0 Mar29 ? 00:00:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
    nginx 4025 955 6 09:56 ? 00:02:07 nginx: worker process
    nginx 4026 955 6 09:56 ? 00:02:07 nginx: worker process
    nginx 4027 955 0 09:56 ? 00:00:00 nginx: cache manager process

Raise the per-user limits for the nginx user:

    # Unlimited file size, CPU time, address space and locked memory
    for limit in fsize cpu as memlock
    do
        grep "nginx" /etc/security/limits.conf | grep -q "$limit" || echo -e "nginx hard $limit unlimited\nnginx soft $limit unlimited" | sudo tee --append /etc/security/limits.conf
    done

    # Open files and number of processes (the limits.conf item is "nproc")
    for limit in nofile nproc
    do
        grep "nginx" /etc/security/limits.conf | grep -q "$limit" || echo -e "nginx hard $limit 64000\nnginx soft $limit 64000" | sudo tee --append /etc/security/limits.conf
    done

Raise the system-wide open file limit:

    grep "file-max" /etc/sysctl.conf || echo -e "fs.file-max = 70000" | tee --append /etc/sysctl.conf

Check the current limit and the files opened by a process:

    ulimit -n
    lsof -p <PID> | wc -l
    lsof -p <PID>
    ls -l /proc/<PID>/fd | wc -l

List the Nginx log directory:

    ls /var/log/nginx/

General Steps for Nginx Performance Tuning

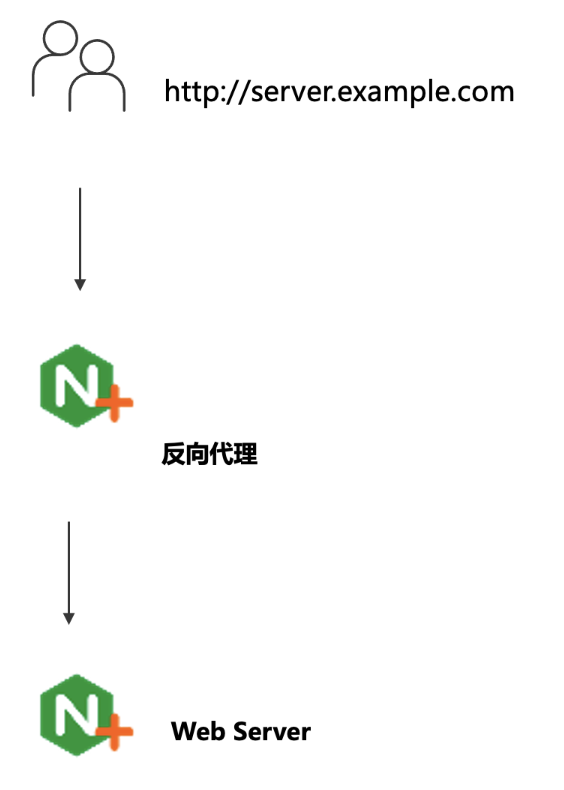

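This part verifies the general steps of performance tuning and the effect of each step on Nginx performance.

The test topology is as follows:

- Client: wrk
- Reverse proxy: the target of the performance tuning
- Web server: NGINX

All of the nodes above have 2 CPU cores and 4 GB of memory.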

| ITEM | NOTE |

|---|---|

默认配置性能 |

配置备份

默认配置文件 /etc/nginx/nginx.conf

Server 配置

WRK 结果

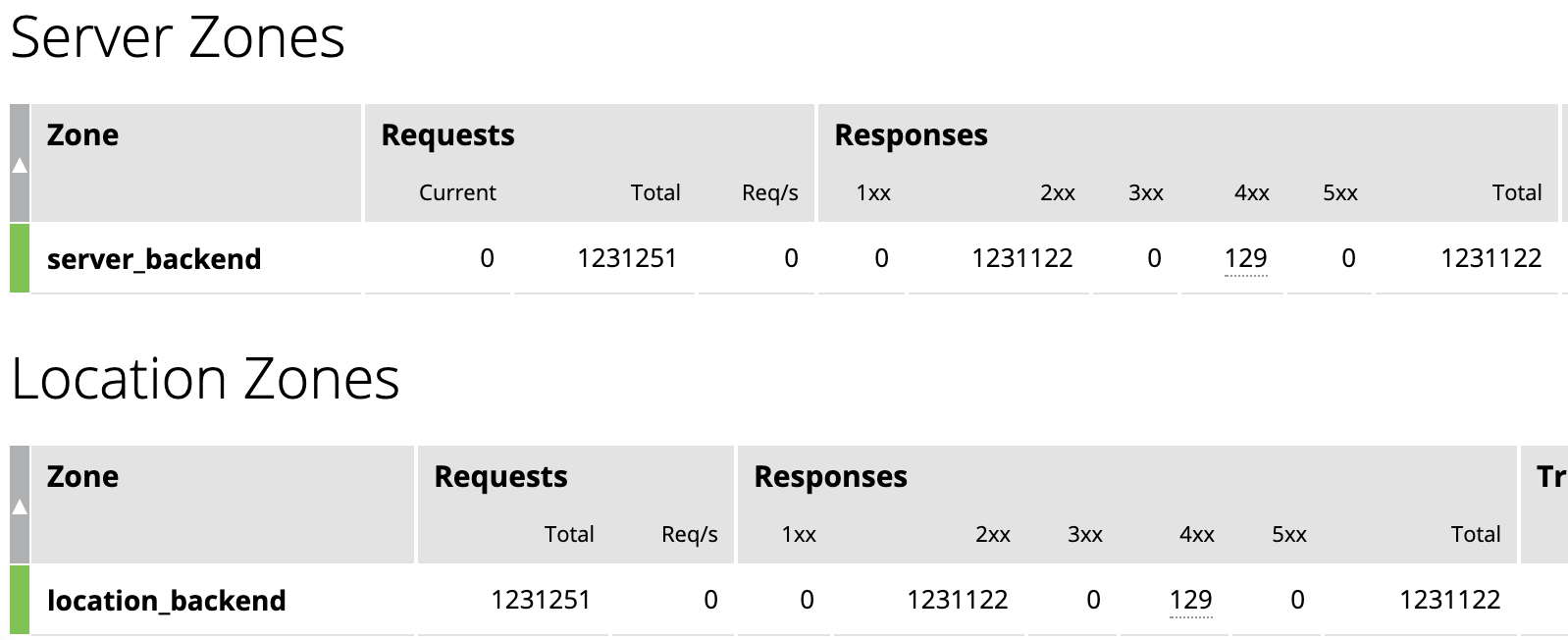

Dashboard UI 上统计信息

|

优化连接数限制 |

修改 worker_connections 从默认 1024 到 10000

WRK 测试结果

|

优化 worker 数量 |

修改 worker_processes 从 1 到 auto

WRK 测试结果

|

连接复用 |

配置连接复用

WRK 测试结果

|

CPU亲和及worker优先级 |

配置

WRK 测试结果

|

日志缓存 |

配置

WRK 测试结果

|

Cache |

配置

WRK 测试结果

|

gzip压缩 |

配置

WRK 测试结果

|

优化CPU开销 |

配置

|
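The configuration for each tuning step is not included in the table. The sketch below gathers several of the steps into a single nginx.conf for orientation. Only `worker_connections 10000` and `worker_processes auto` come from the table; every other value, the backend address and the upstream name are assumptions, and in a real test each change would be applied and measured one at a time.

```nginx
# nginx.conf (sketch): tuning steps from the table combined in one file.
worker_processes     auto;      # one worker per CPU core
worker_cpu_affinity  auto;      # bind each worker to a core
worker_priority      -5;        # assumed value; negative means higher scheduling priority
worker_rlimit_nofile 64000;     # in line with the nofile limit set for the nginx user

events {
    worker_connections 10000;
}

http {
    # Log buffering: write access log entries in batches instead of per request.
    access_log /var/log/nginx/access.log combined buffer=32k flush=5s;

    # gzip compression for common text formats.
    gzip on;
    gzip_types text/plain text/css application/json application/javascript;

    upstream web {
        server 10.1.10.8:8080;  # assumed web server address
        keepalive 32;           # connection reuse: idle upstream connections kept per worker
    }

    server {
        listen 80;

        location / {
            proxy_pass http://web;
            proxy_http_version 1.1;          # required for upstream keepalive
            proxy_set_header Connection "";  # clear "Connection: close"
        }
    }
}
```

A load run such as `wrk -t2 -c200 -d30s http://<proxy>/` after each change (thread count, connection count and duration here are arbitrary) gives comparable numbers between steps.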

Nginx Logs

This part describes Nginx logging.

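| ITEM | NOTE |
|---|---|
| Log levels | (1) List the log levels that Nginx supports. (2) The default configuration enables the notice log level; set the Nginx log level to debug. |
| Access log | (1) Define the log format; for the complete list of variables see http://nginx.org/en/docs/varindex.html. (2) Check the log output. |
| IP and port debugging | (1) Apply the configuration. (2) Check the log output. |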
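The logging configuration for these tests is not shown. The sketch below sets the error log to debug and defines an access log format that records the addresses and ports on both sides of the proxy. The format name `addr_debug`, the log file names, the backend address and the listen port 8089 are hypothetical.

```nginx
# Logging sketch (http context, for example a file under conf.d).
# Levels in increasing severity: debug, info, notice, warn, error, crit, alert, emerg.
error_log /var/log/nginx/error.log debug;

# Client address and port, local address and port, and the upstream that served the request.
log_format addr_debug '$remote_addr:$remote_port -> $server_addr:$server_port '
                      '"$request" $status upstream=$upstream_addr';

server {
    listen 8089;                               # assumed port
    access_log /var/log/nginx/addr_debug.log addr_debug;

    location / {
        proxy_pass http://10.1.10.8:8080;      # assumed backend
    }
}
```

Debug-level messages are only written when the nginx binary was built with debugging support (`--with-debug`, provided as `nginx-debug` in the packages); otherwise the `debug` setting behaves like `info`.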

TODO

| ITEM | NOTE |
|---|---|
| Purpose | |
| Steps | |
| Result | |
